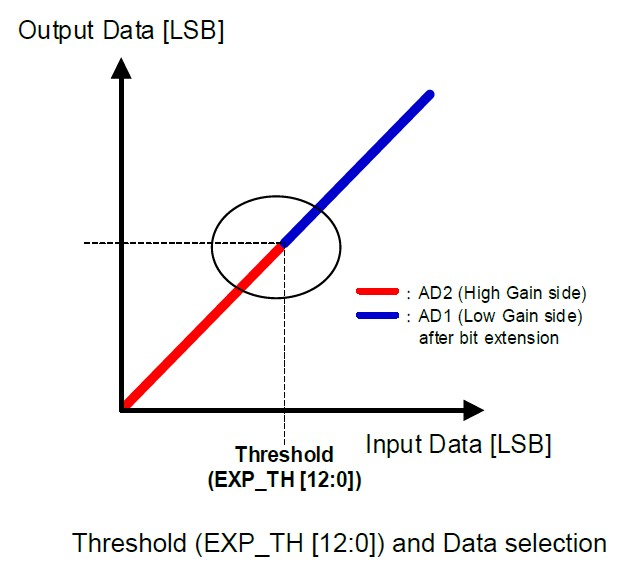

GPU HDR Processing for SONY Pregius Image Sensors

The fourth generation of SONY Pregius image sensors (IMX530, IMX531, IMX532, IMX535, IMX536, IMX537, IMX487) is capable of working in HDR mode. That mode is called "Dual ADC" (Dual Gain), which means that two 12-bit raw frames originate from the same captured image, which is digitized via two ADCs with different analog gains. If the ratio of these gains is around 24 dB (a factor of about 16), one can get one 16-bit raw image from the two 12-bit raw frames. This is the main idea of HDR for these image sensors: how to extend the dynamic range up to 16 bits from two 12-bit raw frames with the same exposure and different analog gains. That method guarantees that both frames have been exposed at the same time and are not spatially shifted. The Dual ADC feature was originally introduced in the third generation of SONY Pregius image sensors, but HDR processing had to be implemented outside the image sensor. In the latest version, the HDR feature is implemented inside the image sensor, which makes it more convenient to work with. Dual ADC mode with on-sensor combination (combined mode) is applicable to high speed sensors only.
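The relation between the 24 dB gain ratio and the 16-bit result can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the usual amplitude convention for analog gain (20·log10 of the ratio); the function names are illustrative and are not part of any camera API.

```python
import math

def gain_ratio_from_db(gain_db: float) -> float:
    """Convert an amplitude gain difference in dB to a linear ratio.

    Assumption: gain is expressed with the 20*log10 amplitude convention.
    """
    return 10.0 ** (gain_db / 20.0)

def extra_bits(gain_db: float) -> float:
    """Number of additional bits of range provided by the gain ratio."""
    return math.log2(gain_ratio_from_db(gain_db))

ratio = gain_ratio_from_db(24.0)      # about 15.85, i.e. close to 16x
bits = extra_bits(24.0)               # about 3.99, i.e. close to 4 bits
merged_bit_depth = 12 + round(bits)   # 12-bit frames + 4 extra bits = 16
exact_db_for_16x = 20.0 * math.log10(16.0)  # about 24.08 dB
```

In other words, a gain ratio of exactly 16 corresponds to about 24.08 dB, which is why the text speaks of a ratio "around 24 dB".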

In the Dual ADC mode we need to specify some parameters for the image sensor. There are two ways of getting the extended dynamic range from SONY Pregius image sensors:

Apart from that, there are two other options:

Dual Gain mode parameters for image merge

Dual Gain mode parameters for HDR

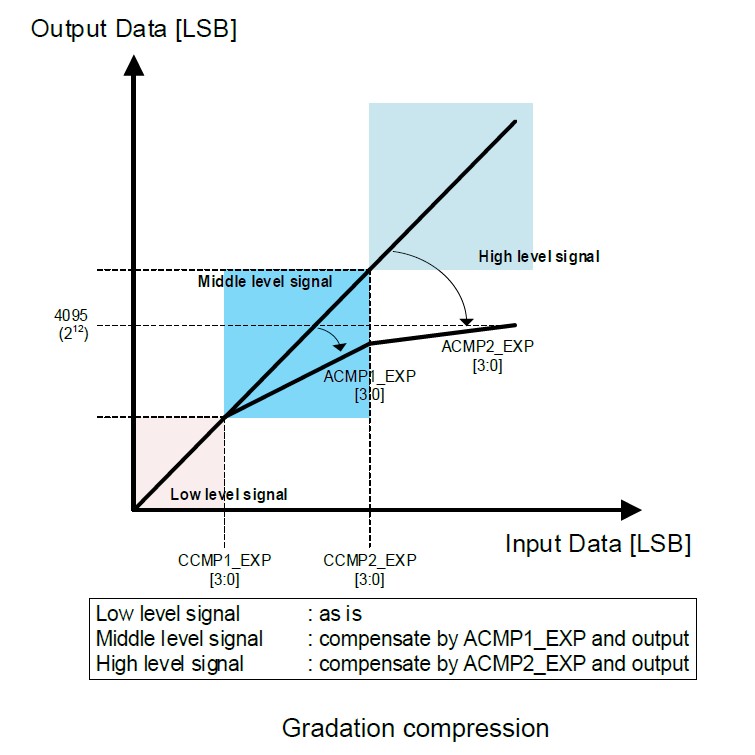

The PWL (piecewise-linear) curve is applied after the image merge, inside the image sensor. The accompanying picture gives detailed info about that curve and shows how gradation compression is implemented on the image sensor.
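Gradation compression with a PWL curve can be illustrated with a small sketch that maps a 16-bit merged value to a 12-bit output. The knee points below are hypothetical values chosen only for illustration; they are not the sensor's actual register settings, and the function name is an assumption as well.

```python
# Hypothetical knee points (input 16-bit value, output 12-bit value).
# Slopes decrease from segment to segment, so highlights are compressed
# more strongly than shadows.
KNEES = [(0, 0), (512, 512), (4096, 2048), (16384, 3072), (65535, 4095)]

def pwl_compress(value, knees=KNEES):
    """Map a 16-bit linear value to 12 bits with a piecewise-linear curve."""
    # Clamp the input to the range covered by the knee points.
    value = max(knees[0][0], min(value, knees[-1][0]))
    # Find the segment that contains the value and interpolate linearly.
    for (x0, y0), (x1, y1) in zip(knees, knees[1:]):
        if value <= x1:
            return y0 + (value - x0) * (y1 - y0) // (x1 - x0)
    return knees[-1][1]

compressed = [pwl_compress(v) for v in (0, 256, 2304, 4096, 65535)]
```

With these illustrative knees the first segment has slope 1, so the darkest values pass through unchanged, while the last segment squeezes roughly 49000 input levels into about 1000 output levels.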

This is an example of real parameters for Dual ADC mode for SONY IMX532 image sensor

For further testing we capture frames from the IMX532 image sensor in the XIMEA MC161CG-SY-UB-HDR camera with exactly the same Dual ADC parameters. If we compare images with gain ratio 16 (High gain is 16 times greater than Low gain) and exposure ratio 1/16 (long exposure for Low gain and short exposure for High gain), we clearly see that the images are alike, but the High gain image has two problems: more noise and more hot pixels, both due to strong analog signal amplification. These issues should be taken into account.

Apart from the standard Dual ADC combined mode, there is a quite popular approach that can bring good results with minimum effort: we can use just the Low gain image and apply custom tone mapping instead of the PWL curve. In that case the dynamic range is lower, but the image could have less noise than images from the combined mode.

Why do we need to apply our own HDR image processing?

It makes sense if on-sensor HDR processing in Dual ADC mode can be improved. Better image quality could come from more sophisticated algorithms for image merge and tone mapping. GPU-based processing is usually very fast, so we can still process image series with HDR support in realtime, which is a must for camera applications.

HDR image processing pipeline on NVIDIA GPU

We've implemented an image processing pipeline on NVIDIA GPU for Dual ADC frames from SONY Pregius image sensors. Actually, we've extended our standard pipeline to work with such HDR images. On NVIDIA GPU we can process any frames from SONY image sensors in HDR mode: one 12-bit HDR raw image (combined mode) or two 12-bit raw frames (non-combined mode). Our result could be better not only due to our merge and tone mapping procedures, but also due to high quality debayering, which also influences the quality of processed images.

Why do we use GPU?

This is the key to getting much higher performance and image quality, which can't be achieved on the CPU.

Low gain image processing

As we've already mentioned, this is the simplest method, which is widely accepted, and it's actually the same as having Dual ADC mode switched off. The Low gain 12-bit raw image has less dynamic range, but it also has less noise, so we can apply either a 1D LUT or a more complicated tone mapping algorithm to that 12-bit raw image to get better results than with the combined 12-bit HDR image that we can get directly from the SONY image sensor. This is a brief info about the pipeline:
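The 1D LUT option mentioned for the Low gain image can be sketched as follows. This is only an illustration: a plain gamma curve stands in for the tone curve, the 12-bit to 8-bit mapping and the gamma value of 2.2 are assumptions, and the rest of the pipeline (preprocessing, demosaicing, color correction) is ignored.

```python
def build_gamma_lut(in_bits=12, out_bits=8, gamma=2.2):
    """Build a 1D LUT that maps linear input codes to gamma-encoded output.

    Assumption: a simple power-law curve, used here as a stand-in for a
    custom tone curve.
    """
    in_max = (1 << in_bits) - 1
    out_max = (1 << out_bits) - 1
    return [round(out_max * (v / in_max) ** (1.0 / gamma))
            for v in range(in_max + 1)]

def apply_lut(pixels, lut):
    """Apply the LUT to a flat sequence of pixel values (one lookup each)."""
    return [lut[p] for p in pixels]

lut = build_gamma_lut()
toned = apply_lut([0, 409, 2048, 4095], lut)
```

Because the curve is precomputed once, applying it costs one table lookup per pixel, which is why a 1D LUT is the cheapest form of tone mapping.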

Fig.1. Low gain image processing for IMX532

Image processing in the Combined mode

Though we can get a ready 12-bit raw HDR image from the SONY image sensor in Dual ADC mode, there is still a way to improve the image quality: we can apply our own tone mapping. That's what we've done, and the results are consistently better. This is a brief info about the pipeline:

Fig.2. SONY Dual ADC combined mode image processing for IMX532 with a custom tone mapping

Low gain + High gain (non-combined) image processing

To get both raw frames from the SONY image sensor, we need to send them to a PC via the camera interface. This can strain the interface bandwidth, and for some cameras the frame rate must be decreased to cope with bandwidth limitations. With PCIe, Coax or 10/25/50-GigE cameras, it could be possible to send both raw images in realtime without frame drops.

As soon as we get the two raw frames (Low gain and High gain), we start with preprocessing, then merge them into one 16-bit linear image and apply a tone mapping algorithm. Good tone mapping algorithms are usually more complicated than just a PWL curve, so we can get better results, though it definitely takes much more time. To solve that issue in a fast way, high performance GPU-based image processing could be the best approach. That's exactly what we've done, and we can get better image quality and higher dynamic range in comparison with the combined HDR image from SONY and with the processed Low gain image as well.

HDR workflow for Dual ADC non-combined image processing on GPU
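The merge of a Low gain and a High gain frame into one 16-bit linear image can be illustrated with a deliberately simple per-pixel rule. This is a minimal sketch and not the merge used in the actual pipeline: it assumes a gain ratio of 16, a hypothetical saturation threshold, and frames that have already been preprocessed (black level removed).

```python
GAIN_RATIO = 16              # High gain is 16 times greater than Low gain
SATURATION_THRESHOLD = 3900  # hypothetical 12-bit level treated as clipped

def merge_pixel(low, high, gain_ratio=GAIN_RATIO,
                threshold=SATURATION_THRESHOLD):
    """Merge one Low gain / High gain sample pair into a 16-bit linear value.

    The High gain sample already sits on the fine (16-bit) scale, so it is
    used as-is while it is below the threshold. Otherwise the Low gain
    sample is scaled up by the gain ratio.
    """
    if high < threshold:
        return high
    return low * gain_ratio

def merge_frames(low_frame, high_frame):
    """Merge two flat raw frames of equal length, pixel by pixel."""
    return [merge_pixel(l, h) for l, h in zip(low_frame, high_frame)]

merged = merge_frames([10, 1000, 4095], [160, 4095, 4095])
```

Since both frames come from the same exposure, each pixel pair has the same Bayer color and position, so the rule can be applied directly to raw data. The largest possible output is 4095 × 16 = 65520, which fits into 16 bits. A production merge would typically blend the two samples near the threshold instead of switching abruptly.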

In that workflow the most important modules are merge, global/local tone mapping and demosaicing. We've implemented that image processing pipeline with Fastvideo SDK, which runs very fast on NVIDIA GPU.

Fig.3. SONY Dual ADC non-combined (two-image) processing for IMX532

Summary for Dual ADC mode on GPU

We believe that the best results for image quality could be achieved in the following modes:

If we are working in the non-combined mode, we can get good image quality, but camera bandwidth limitations and processing time could be a problem. If we are working with the results of the combined mode, image quality is comparable, the processing pipeline is less complicated (so performance is better), and we need less bandwidth, so it can be recommended for most use cases. With a proper GPU, image processing can be done in realtime at the max fps.

The above frames were captured from the SONY IMX532 image sensor in Dual ADC mode. The same approach is applicable to all high speed SONY Pregius image sensors of the 4th generation that are capable of working in Dual ADC combined mode.

Processing benchmarks on Jetson AGX Xavier and GeForce RTX 2080TI in the combined mode

We've measured GPU kernel times to evaluate the performance of the solution in the combined mode. This is the way to get high dynamic range and very good image quality, so knowing the performance could be valuable. Below we publish timings for several image processing modules, because the full pipeline could be different in the general case.

Table 1. GPU kernel time in ms for IMX532 raw frame processing in the combined mode (5328×3040, bayer, 12-bit)

This is just a part of the full image processing pipeline, shown to give an idea of how fast it could be on the GPU.