Jetson image processing for camera applications

Jetson hardware is a unique solution from NVIDIA. It is essentially a mini PC with powerful and versatile hardware. Apart from an ARM processor, it has a high-performance GPU with CUDA cores and Tensor cores (on AGX Xavier), plus software for CPU, GPU and AI.

Below you can see an example of how to build a camera system on Jetson. This is an important task if you want to create a realtime solution for a mobile imaging application. With a thoughtful design, one can even implement a multicamera system on a single Jetson, and some NVIDIA partners have shown that this is achievable. How can image processing be done on NVIDIA Jetson?

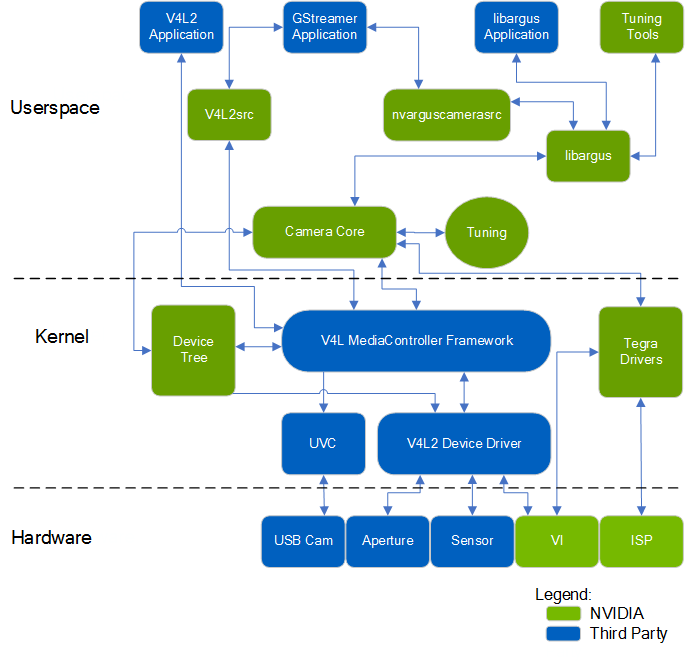

Here we consider only ISP and CUDA-based image processing pipelines to describe how the task could be solved and which image processing algorithms could be used. To begin with, we look at the NVIDIA camera architecture.

Camera Architecture Stack

The NVIDIA camera software architecture includes NVIDIA components for ease of development and customization:

Fig.1. Diagram from Development Guide for NVIDIA Tegra Linux Driver Package (31.1 Release, Nov.2018)

NVIDIA Components of the camera architecture

NVIDIA provides the OV5693 Bayer sensor as a sample and tunes this sensor for the Jetson platform. The driver code, based on the media controller framework, is available at ./kernel/nvidia/drivers/media/i2c/ov5693.c. NVIDIA further offers additional sensor support for BSP software releases. Developers must work with NVIDIA certified camera partners for any Bayer sensor and tuning support. The work involved includes:

These tools and operating mechanisms are NOT part of the public Jetson Embedded Platform (JEP) Board Support Package release. For more information on sensor driver development, see the NVIDIA V4L2 Sensor Driver Programming Guide.

NVIDIA Jetson includes an internal hardware-based solution (ISP) which was created for realtime camera applications. The libargus library is used to control these features on Jetson hardware.

Camera application API libargus offers:

RAW output CSI cameras needing ISP can be used with either libargus or the GStreamer plugin. In either case, the V4L2 media-controller sensor driver API is used.

Sensor driver API (V4L2 API) enables:

V4L2 for encode opens up many features like bit rate control, quality presets, low latency encode, temporal tradeoff, motion vector maps, and more.

Libargus library features for Jetson ISP
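As a rough sketch of the GStreamer route for a RAW CSI camera mentioned earlier, the helper below assembles a pipeline description string. It assumes the `nvarguscamerasrc` and `nvvidconv` elements shipped with recent L4T releases. The function name, default resolution and frame rate are illustrative assumptions, and the caps that a particular sensor supports may differ.

```python
def build_csi_pipeline(sensor_id=0, width=1920, height=1080, fps=30):
    """Return a GStreamer pipeline description for a CSI camera.

    Hypothetical helper: the element names are assumed to be the ones
    provided by L4T (nvarguscamerasrc, nvvidconv); the defaults are
    illustrative, not values taken from the article.
    """
    return (
        # Capture through the ISP into NVMM (device) memory.
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width={width}, height={height}, "
        f"format=NV12, framerate={fps}/1 ! "
        # Copy out of NVMM memory and convert to a CPU-friendly format.
        "nvvidconv ! video/x-raw, format=BGRx ! "
        "videoconvert ! video/x-raw, format=BGR ! "
        # Keep only the newest frame to limit latency.
        "appsink drop=true max-buffers=1"
    )


if __name__ == "__main__":
    print(build_csi_pipeline())
```

The resulting string could be handed to an application that accepts GStreamer pipeline descriptions, for instance OpenCV built with GStreamer support.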

To summarize, the ISP is a fixed-function processing block which can be configured through the Argus API, Linux drivers, or the Technical Reference Manual, which contains register information for a particular Jetson. All information about the algorithms used in libargus (AF, AE, Bayer filter, resize) is closed, so the user needs to test them to evaluate quality and performance. The ISP, accessed through libargus, is a hardware-based solution from NVIDIA for image processing on Jetson; it was designed for mobile camera applications with high performance, moderate quality and low latency.

How to choose the right camera

To be able to utilize the ISP, we need a camera with a CSI interface. NVIDIA partner Leopard Imaging manufactures many cameras with that interface, and you can choose a model according to your requirements. The CSI interface is the key feature for sending data from a camera to Jetson with the possibility of using the ISP through libargus for image processing.

If we have a camera without CSI support (for example, a GigE, USB-3.x, CameraLink, Coax, 10-GigE, Thunderbolt or PCIe camera), we need to create a CSI driver to be able to work with the Jetson ISP.

Even without a CSI driver, there is still a way to connect your camera to Jetson. You just need a proper carrier board with the correct hardware interface. Usually this is either USB-3.x or PCIe. There is a wide choice of USB3 cameras on the market, and you can easily choose the camera or carrier board that you need, for example from NVIDIA partner XIMEA GmbH.

Fig.2. XIMEA carrier board for NVIDIA Jetson TX1/TX2

To work further with the camera, you need a camera driver for L4T and the ARM processor. This is the minimum requirement for connecting your camera to Jetson via a carrier board. However, keep in mind that in this case the ISP is not available. The next part deals with such a situation.

How to work with non-CSI cameras on Jetson

Let's assume that we've already connected a non-CSI camera to Jetson and we can send data from the camera to system memory on Jetson. Now we can't access the Jetson ISP, and we need to consider other ways of image processing. The fastest solution is to utilize Fastvideo SDK for Jetson GPUs. The SDK is available for Jetson Nano, TK1, TX1, TX2, TX2i, Xavier NX and AGX Xavier.

You just need to send data to GPU memory and create a full image processing pipeline on CUDA. This keeps the CPU free and ensures fast processing thanks to the excellent CUDA performance of the mobile Jetson GPU. Based on that approach, the user can create multicamera systems on Jetson with Fastvideo SDK together with USB-3.x or PCIe cameras.

For more information about realtime Jetson applications with multiple cameras, have a look at the site of NVIDIA partner XIMEA, which manufactures high quality cameras for machine vision, industrial and scientific applications.
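To give a feel for what a "full image processing pipeline" on raw data involves, here is a minimal CPU reference sketch in plain Python. It is not the Fastvideo SDK API and not CUDA code. The stages (black level subtraction, white balance, half-resolution demosaic, gamma) are a typical set chosen for illustration, and the RGGB layout, black and white levels, gains and gamma value are all assumptions. On Jetson, each stage would run as a GPU kernel instead.

```python
def process_raw_rggb(raw, width, height, black_level=64, white_level=4095,
                     wb_gains=(2.0, 1.0, 1.5), gamma=2.2):
    """Convert a 12-bit RGGB Bayer frame into half-resolution 8-bit RGB.

    raw is a flat, row-major list of sensor values. All default parameters
    are illustrative assumptions, not values from any SDK.
    """
    def norm(value):
        # Black level subtraction and scaling to the 0..1 range.
        v = (value - black_level) / (white_level - black_level)
        return max(0.0, min(1.0, v))

    out = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            # Each 2x2 RGGB cell becomes one RGB pixel (simplest demosaic).
            r = norm(raw[y * width + x])
            g = 0.5 * (norm(raw[y * width + x + 1]) +
                       norm(raw[(y + 1) * width + x]))
            b = norm(raw[(y + 1) * width + x + 1])
            # White balance, clipping, gamma, then quantization to 8 bits.
            balanced = (r * wb_gains[0], g * wb_gains[1], b * wb_gains[2])
            row.append(tuple(
                round(255 * min(1.0, c) ** (1.0 / gamma)) for c in balanced
            ))
        out.append(row)
    return out


if __name__ == "__main__":
    frame = [2048] * 16  # hypothetical 4x4 mid-gray raw frame
    print(process_raw_rggb(frame, 4, 4))
```

A production pipeline would use a higher quality full-resolution demosaic and additional stages such as denoising and color correction; the half-resolution demosaic here only keeps the example short.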

Fig.3. NVIDIA Jetson multiple camera system on TX1/TX2 carrier board from XIMEA

Image processing on Jetson with Fastvideo SDK

Fastvideo SDK is intended for camera applications and has a wide choice of features for realtime raw image processing on GPU. The SDK is also available for NVIDIA GeForce/Quadro/Tesla GPUs and consists of high quality algorithms which require significant computational power.

This is the key difference in comparison with any hardware-based solution. Usually ISP/FPGA/ASIC image processing modules offer low latency and high performance, but because of hardware restrictions, the algorithms they use are relatively simple and deliver moderate image quality.

Apart from image processing modules, Fastvideo SDK has high speed compression solutions: JPEG (8/12 bits), JPEG2000 (8-16 bits) and Bayer (8/12 bits) codecs, all implemented on GPU. These codecs run on CUDA and have been heavily tested, so they are reliable and very fast.

For the majority of camera applications, 12 bits per pixel is the standard bit depth, so it makes sense to store compressed images in at least 12-bit format, or even 16-bit. The full image processing pipeline in Fastvideo SDK works at 16-bit precision, but some modules that require better precision are implemented with float.
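The effect of bit depth on the amount of data is easy to estimate. The sketch below computes the size of an uncompressed raw frame and the resulting stream rate. The 4000 x 3000 resolution and 30 fps are hypothetical numbers chosen only to keep the arithmetic readable.

```python
def frame_bytes(width, height, bits_per_pixel):
    """Size of one uncompressed frame in bytes (tightly packed, rounded up)."""
    total_bits = width * height * bits_per_pixel
    return (total_bits + 7) // 8


def stream_rate_mb_s(width, height, bits_per_pixel, fps):
    """Uncompressed data rate in megabytes (10**6 bytes) per second."""
    return frame_bytes(width, height, bits_per_pixel) * fps / 1e6


if __name__ == "__main__":
    # Hypothetical 12 MPix sensor at 30 fps.
    for bpp in (8, 12, 16):
        rate = stream_rate_mb_s(4000, 3000, bpp, 30)
        print(f"{bpp:2d} bits per pixel: {rate:.0f} MB/s")
```

With these assumed numbers, a packed 12-bit stream needs 540 MB/s and a 16-bit container needs 720 MB/s before compression, which is why fast codecs matter for storage and transmission.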

Fig.4. Image processing workflow on CUDA at Fastvideo SDK for camera applications

To check the quality and performance of raw image processing with Fastvideo SDK, the user can download a GUI application for Windows called Fast CinemaDNG Processor. The software is fully based on Fastvideo SDK and can be downloaded from www.fastcinemadng.com together with sample image series in DNG format. The application has a benchmarks window that shows time measurements for each stage of the image processing pipeline on GPU.

High-resolution multicamera system for UAV Aerial Mapping

Application: 5K vision system for Long Distance Remote UAV

Manufacturer: MRTech company

Cameras

Hardware

Jetson GPU image processing

Fig.5. NVIDIA Jetson TX2 with XIMEA MX200CG-CM (20 MPix) and two MX031CG-SY (3.1 MPix) cameras.

You can find more information about MRTech solutions for Jetson image processing here.

AI imaging applications on Jetson

With the arrival of AI solutions, the following task needs to be solved: how to prepare high quality input data for such systems? Usually we get images from cameras in realtime, and if we need high quality images, then choosing a high resolution color camera with a Bayer pattern is justified.

Next we need to implement fast raw processing, after which we can feed our AI solution with good pictures in realtime. The latest Jetson AGX Xavier has high performance Tensor cores for AI applications, and these cores are ready to receive images from CUDA software. Thus the output of CUDA-based processing can be passed directly to inference on the Tensor cores, which solves the whole task very fast.

Links

